The psychologist Daniel Kahneman died March 27. He was one of the few thinkers who managed to influence thought in multiple fields, most notably psychology and economics, but also in international relations and many other spheres dependent on decision-making.

In 2011, Kahneman published his book Thinking, Fast and Slow, to universal acclaim. He had won the Nobel Memorial Prize in Economic Sciences in 2002, a striking distinction given that he was a psychologist, not an economist. He was especially celebrated for his work on decision-making under conditions of uncertainty. He and his colleague Amos Tversky uncovered various biases and fallacies to which human beings are prone when making decisions.

Thinking, Fast and Slow identified two basic “systems” of thinking that human beings use when making decisions: System 1, the “fast” mode, used for tasks that are well understood and performed so often that little conscious cogitation is necessary; and System 2, the “slow” mode, used when the situation is unfamiliar or complicated.

“System 1 operates automatically and quickly, with little or no effort and no sense of voluntary control. …System 2 allocates attention to the effortful mental activities that demand it, including complex computations. The operations of System 2 are often associated with the subjective experience of agency, choice, and concentration.”[1]

Examples of System 1 thinking, from Thinking, Fast and Slow:

- “Detect that one object is more distant than another.

- “Orient to the source of a sudden sound.

- “Complete the phrase ‘bread and…’

- “Make a ‘disgust face’ when shown a horrible picture.

- “Detect hostility in a voice.

- “Answer to 2 + 2 = ?

- “Read words on large billboards.

- “Drive a car on an empty road.

- “Find a strong move in chess (if you are a chess master).

- “Understand simple sentences.

- “Recognize that a ‘meek and tidy soul with a passion for detail’ resembles an occupational stereotype.”

Examples of System 2 thinking, from the same book:

- “Brace for the starter gun in a race.

- “Focus attention on the clowns in the circus.

- “Focus on the voice of a particular person in a crowded and noisy room.

- “Look for a woman with white hair.

- “Search memory to identify a surprising sound.

- “Maintain a faster walking speed than is natural for you.

- “Monitor the appropriateness of your behavior in a social situation.

- “Count the occurrences of the letter a in a page of text.

- “Tell someone your phone number.

- “Park in a narrow space (for most people except garage attendants).

- “Compare two washing machines for overall value.

- “Fill out a tax form.

- “Check the validity of a complex logical argument.”

Nassim Nicholas Taleb’s “narrative fallacy,” which Kahneman cites as an influence, can be seen as an example of humans using “System 1” in “System 2” situations. When a situation seems superficially similar to one encountered in the past, humans often slip into System 1 mode, automatically mistaking what may in fact be a novel situation for something familiar, applying the previous explanatory “narrative” to it, and responding with a solution that may turn out to be inappropriate.

Kahneman was obviously a great thinker, and his distinction between “System 1” and “System 2” is a notable advance. But I would suggest that there is an entire realm of thinking about decisions under conditions of fundamental uncertainty that he completely ignores. (Even geniuses can’t be expected to cover the entire gamut of reality.)

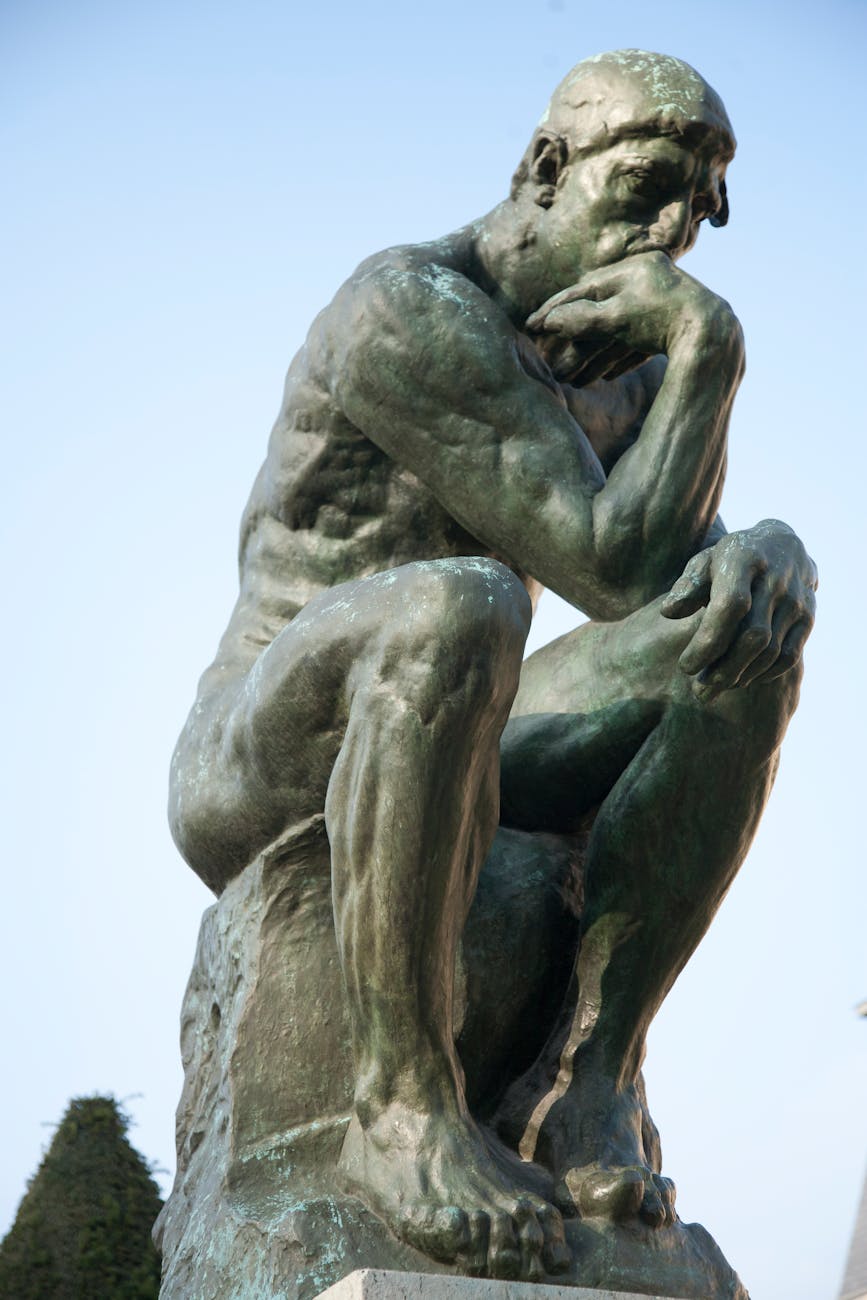

I would call this realm “System 3”: “imagination” or “creativity.” I would suggest that it was the “System” Daniel Kahneman was using when he thought up Systems 1 and 2. (In words attributed to Einstein, “Imagination is more important than knowledge, because everything that is known first had to be imagined.”)

Examine all the examples shown above, for both Systems 1 and 2. In each case, there is a defined task, which makes the kind of experimental science in which Kahneman was engaged far more tractable. You can measure the success or failure of the subject at each of these tasks. Either you park the car between the painted lines or you don’t. Either you maintain a fast walking speed or you don’t. Either you count the number of ‘a’s on the page correctly or you don’t.

But what do you do in a situation in which the level of uncertainty is so great that it is unclear what tasks need to be accomplished? I would posit that “System 3” is needed – imagination, creativity. In particular, what I would call “rigorous imagination” – taking a systematic approach to generating plausible future outcomes, and thinking deeply about them, irrespective of their probability.

What I am proposing is essentially a scenario planning approach to the future. This approach is often misunderstood. Kahneman himself appears to misunderstand it when he writes:

“The most coherent stories are not necessarily the most probable, but they are plausible, and the notions of coherence, plausibility, and probability are easily confused by the unwary. …The uncritical substitution of plausibility for probability has pernicious effects on judgments when scenarios are used as tools of forecasting.”[2]

But scenarios should never be used as tools of forecasting. In fact, forecasting is either impossible or useless for the vast majority of actual strategic decisions leaders must make under conditions of uncertainty in the real world, as opposed to the academic laboratory. Philip Tetlock, whose work Kahneman cites extensively in Thinking, Fast and Slow, was, I believe, thinking of this passage when he and J. Peter Scoblic (who had previously written approvingly of our work for the U.S. Coast Guard) wrote the following in a co-authored article:

“…[H]aving too many different versions of the future can make it nearly impossible to act. Good scenario planning puts boundaries on the future, but those limits are often not enough for decision-makers to work with. They need to know which future is most likely. …[E]ven though a detailed narrative may seem more plausible than a sparse one, every contingent event decreases the likelihood that a given scenario will actually transpire. Nevertheless, people frequently confuse plausibility for probability, assigning greater likelihood to specific stories that have the ring of truth. They might, illogically, consider a war with China triggered by a clash in the Taiwan Strait more likely than a war with China triggered by any possible cause.”[3]

This passage betrays a fundamentally mistaken conception of scenario planning. If scenarios were about forecasting, the drawback the authors point out would be serious. But they are not. Scenarios are not alternatives, one of which must “come true,” nor are they meant to be used in isolation. Only as a carefully balanced, rigorously differentiated set are they of any use in planning. Individual scenarios are intended only, as Scoblic and Tetlock rightly imply, to provoke an arbitrary number of ideas about how the future could evolve, providing raw material for a broader, cross-scenario synthesis that leads to a new strategic direction.

So the question of “which future is most likely” never arises. None of them are at all likely. They are merely artifacts, scaffolding (as my colleague Charles Thomas has put it), rigorously designed to facilitate creativity; once they have elicited the desired ideas about plausible future eventualities, they can more or less be dispensed with, as a broader vision, completely unobtainable through forecasting, is derived from their results.

Pieces of each detailed scenario may well come to pass (indeed, they almost always do); an entire, detailed scenario never can, because each added detail, as Scoblic and Tetlock note, decreases the odds of it “coming true.” But making the scenario less detailed would lead to the sort of vagueness the authors rightly decry, defeating the purpose of the entire enterprise. The detail within each of the systematically varied scenarios provides the verisimilitude that provokes the creativity of those who go through the process, and allows them to suddenly envision ways in which their world could be very, very different.
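To make the arithmetic behind “each added detail decreases the odds” concrete, here is a minimal sketch. It assumes, as a simplification not made above, that a scenario can be modeled as a chain of events E_1 through E_n that must all occur. By the chain rule of probability, the whole scenario is the previous, shorter scenario multiplied by one more conditional probability, which can be at most 1:

```latex
P(E_1 \cap E_2 \cap \cdots \cap E_n)
  = P(E_1)\prod_{k=2}^{n} P(E_k \mid E_1 \cap \cdots \cap E_{k-1})
  \le P(E_1 \cap \cdots \cap E_{n-1})
```

So a new detail leaves the probability unchanged only if it is certain given everything before it, and otherwise lowers it. With purely hypothetical numbers: five details, each with a 50 percent chance given the ones before it, give the full scenario a probability of (0.5)^5 ≈ 3 percent, even though every individual piece is a coin flip.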

All of these thoughts were provoked, in turn, by my reading of Tom Chivers’ just-published book Everything Is Predictable: How Bayesian Statistics Explain Our World. The appeal of the rather cut-and-dried Systems 1 and 2 to all of these brilliant academic and journalistic minds, and their failure to grasp the crying need for what must appear to them to be the messier, less objectively measurable “System 3” of rigorous imagination that I am proposing, are easily explained: it is yet another facet of the Cult of Prediction.

Numbers are so seductive and seem so all-embracing. But the certainty they seem to provide is, as someone wrote somewhere, truly fatal.

[1] Daniel Kahneman, Thinking, Fast and Slow, https://books.apple.com/us/book/thinking-fast-and-slow/id443149884

[2] Daniel Kahneman, Thinking, Fast and Slow, https://books.apple.com/us/book/thinking-fast-and-slow/id443149884

[3] J. Peter Scoblic and Philip E. Tetlock, “A Better Crystal Ball,” Foreign Affairs, November/December 2020